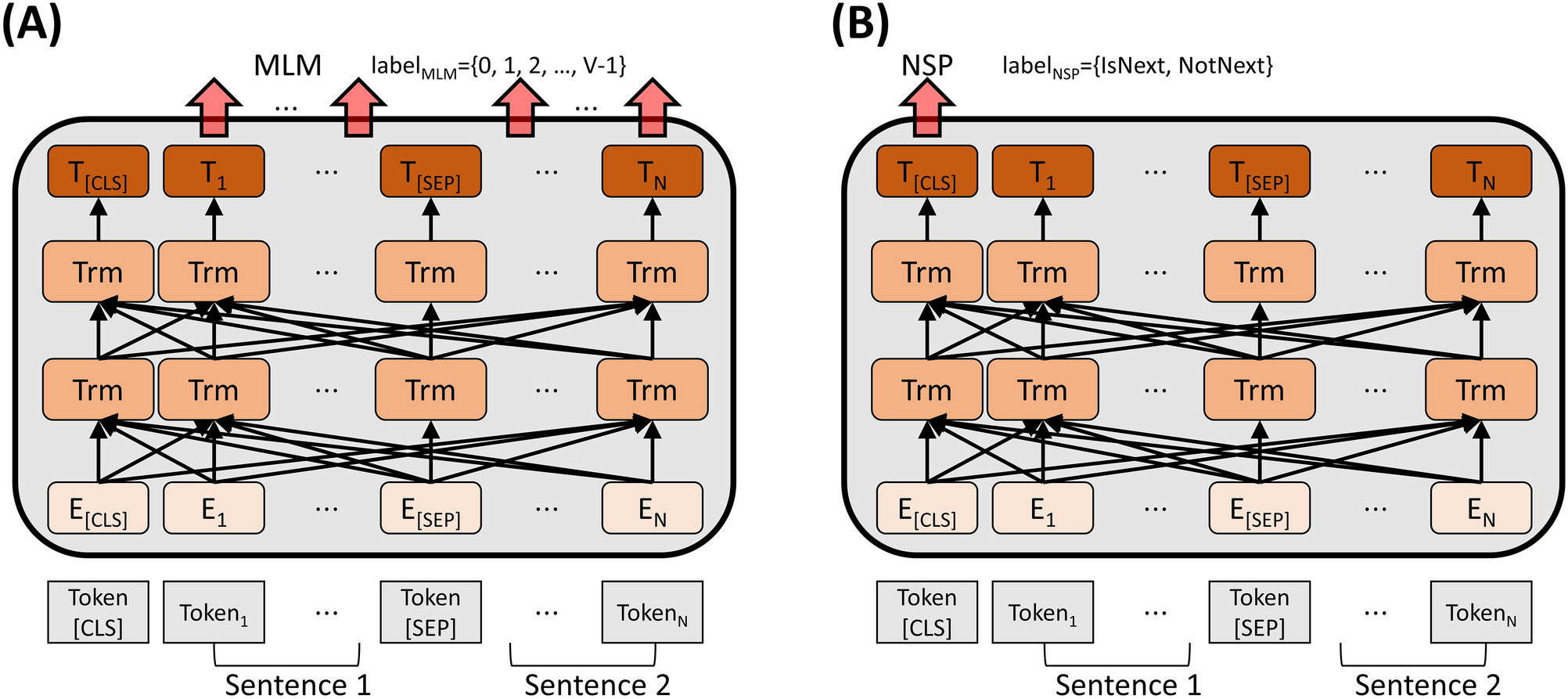

The Masked Language Modeling (MLM) objective as basis for training

By a mysterious writer

Last updated 22 September 2024

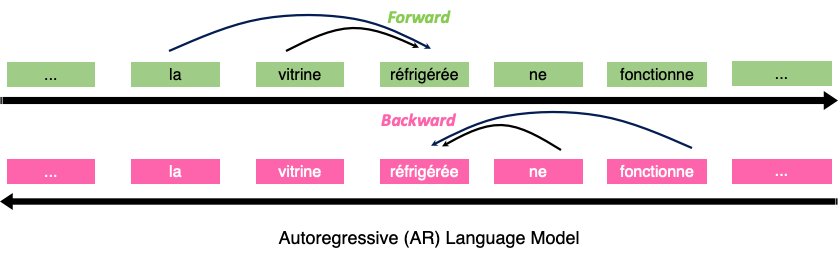

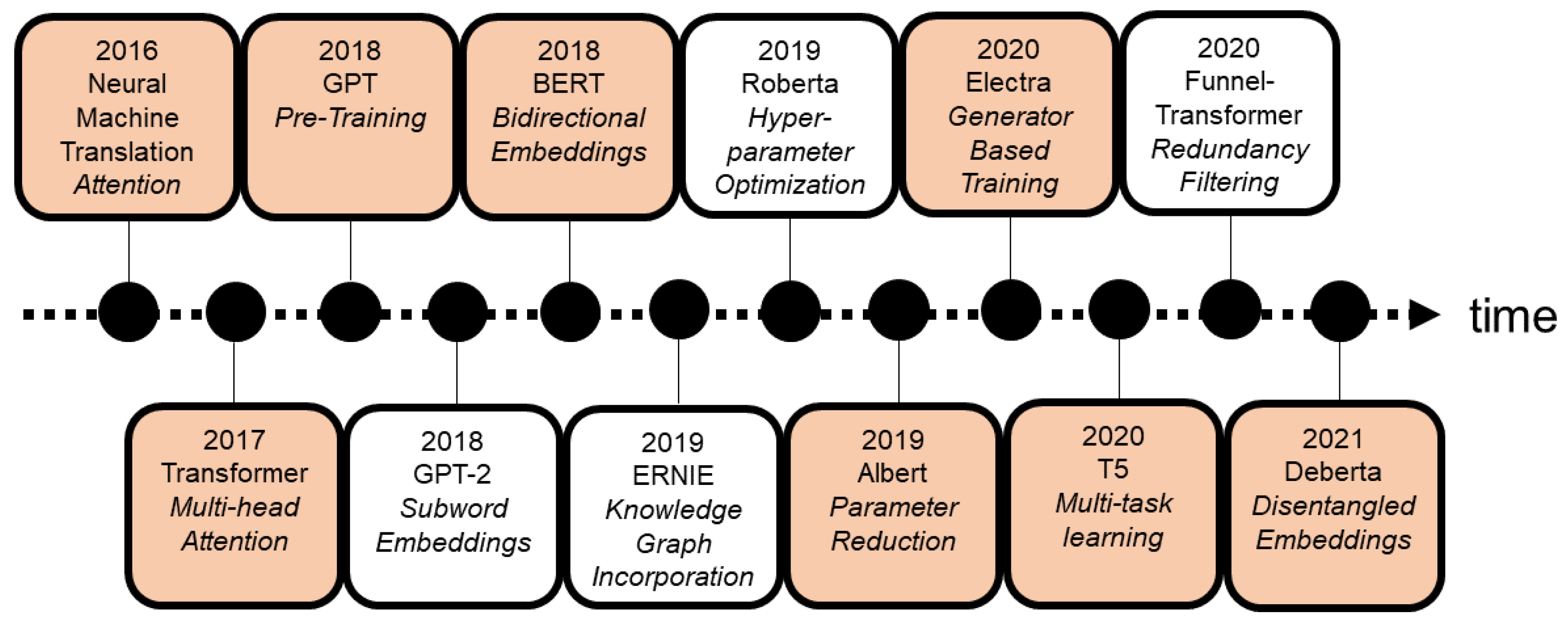

Understanding Language using XLNet with autoregressive pre

Decoding the Mechanics of Masked and Causal Language Models

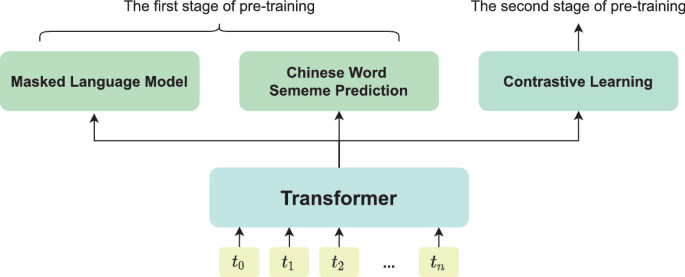

K-DLM: A Domain-Adaptive Language Model Pre-Training Framework

Masked Language Modeling (MLM) in BERT pretraining explained

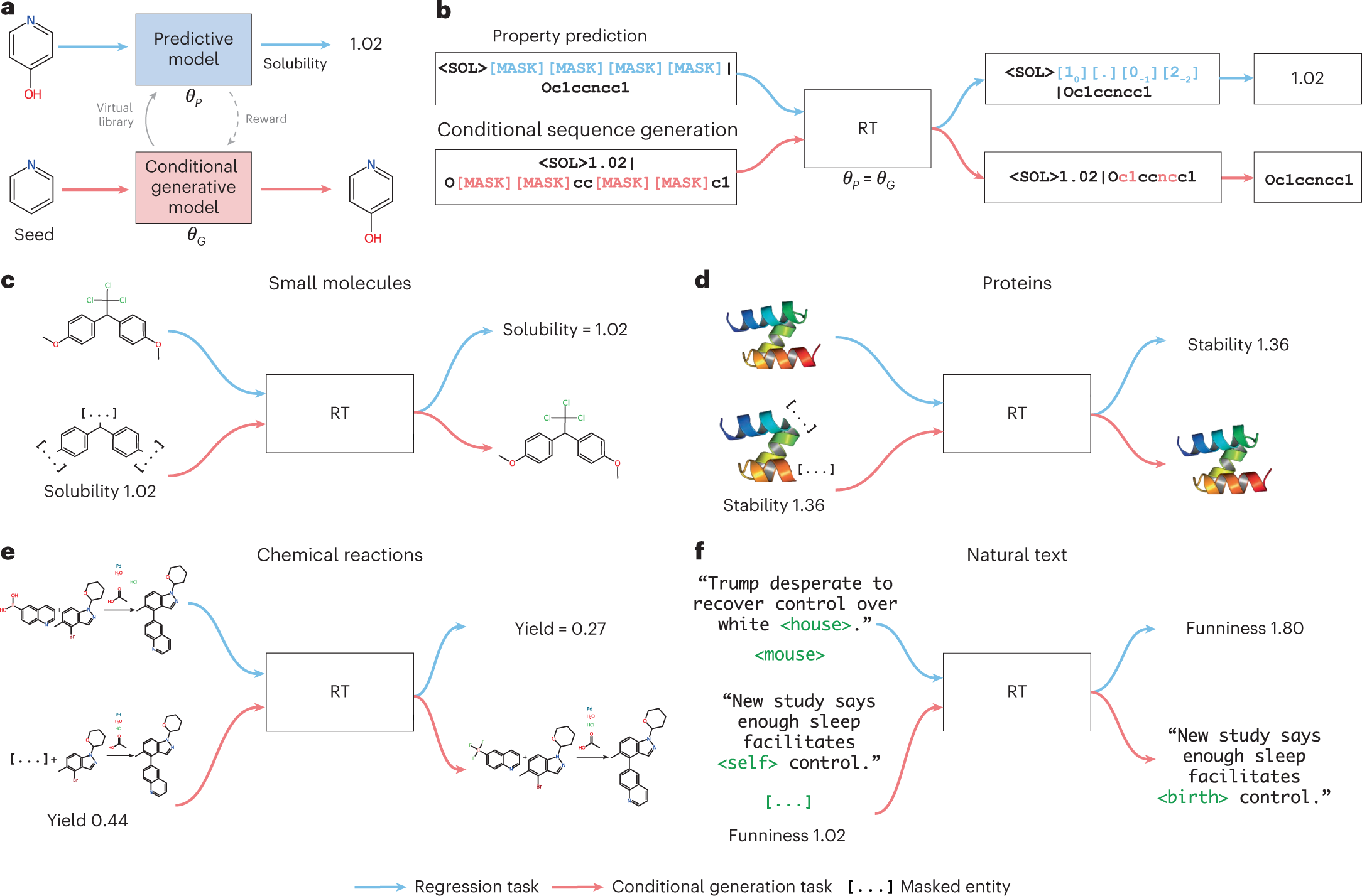

Regression Transformer enables concurrent sequence regression and

Researchers From China Propose A New Pre-trained Language Model

Entropy, Free Full-Text

What Language Model Architecture and Pretraining Objective Work

A pre-trained BERT for Korean medical natural language processing

Pre-trained models: Past, present and future - ScienceDirect

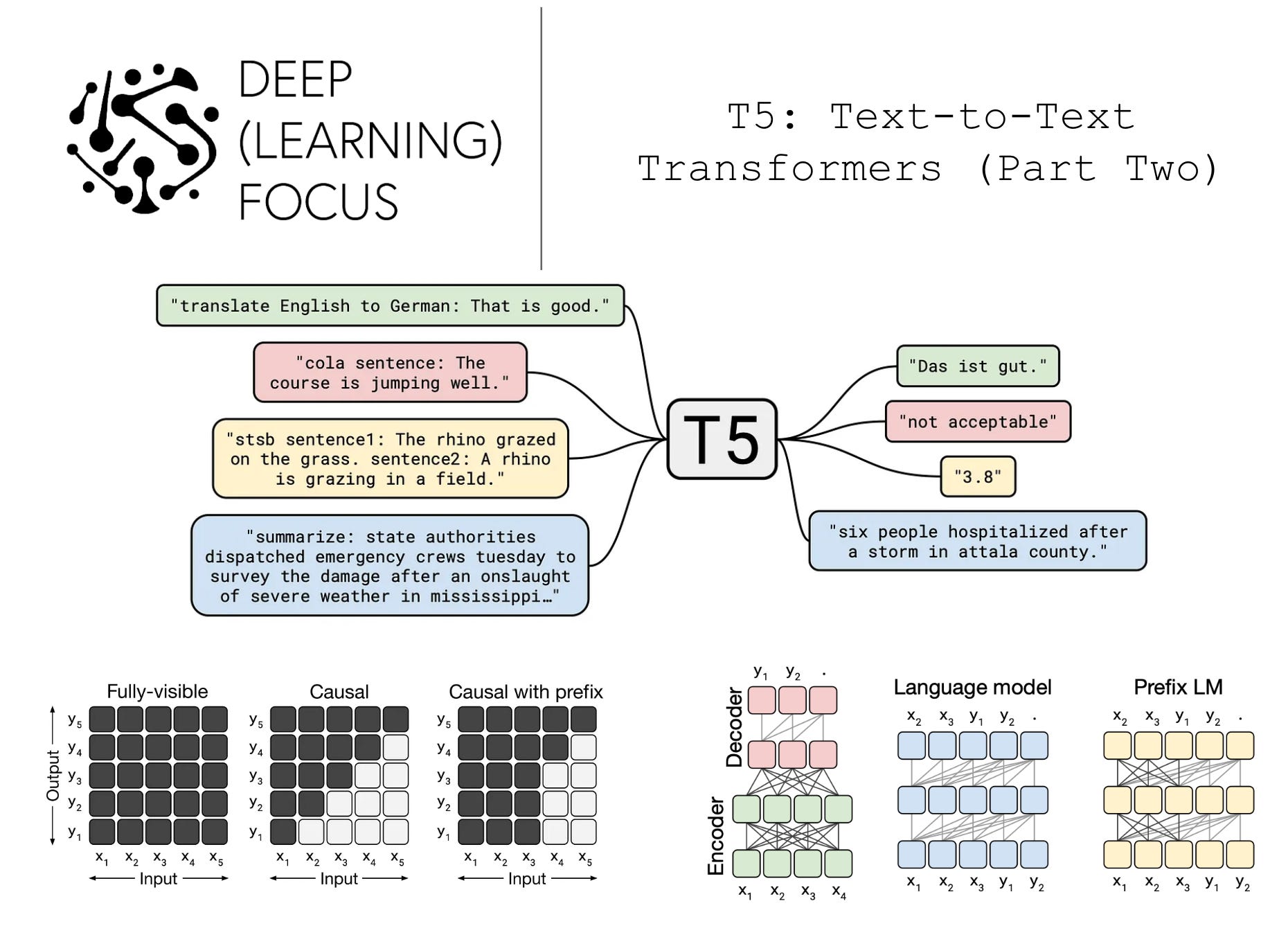

T5: Text-to-Text Transformers (Part Two)

Recomendado para você

-

Tay Training: Personal Online – Taymila Miranda22 setembro 2024

Tay Training: Personal Online – Taymila Miranda22 setembro 2024 -

A Man Lifting Dumbbell while Wearing Virtual Goggles · Free Stock22 setembro 2024

A Man Lifting Dumbbell while Wearing Virtual Goggles · Free Stock22 setembro 2024 -

Potty Training Chart Kid Reward Jar Instant Download Toilet22 setembro 2024

Potty Training Chart Kid Reward Jar Instant Download Toilet22 setembro 2024 -

Maryland Air Guard, Estonian Partners Focus on Cyber Defense > Air22 setembro 2024

-

Percentage of agencies offering specific psychotherapies, by age22 setembro 2024

Percentage of agencies offering specific psychotherapies, by age22 setembro 2024 -

Pilot Training Next begins third iteration next month > Air22 setembro 2024

-

Presynaptic Dysfunction in Neurons Derived from Tay–Sachs iPSCs22 setembro 2024

Presynaptic Dysfunction in Neurons Derived from Tay–Sachs iPSCs22 setembro 2024 -

Training Facility Norms and Standard Equipment Lists: Volume 222 setembro 2024

Training Facility Norms and Standard Equipment Lists: Volume 222 setembro 2024 -

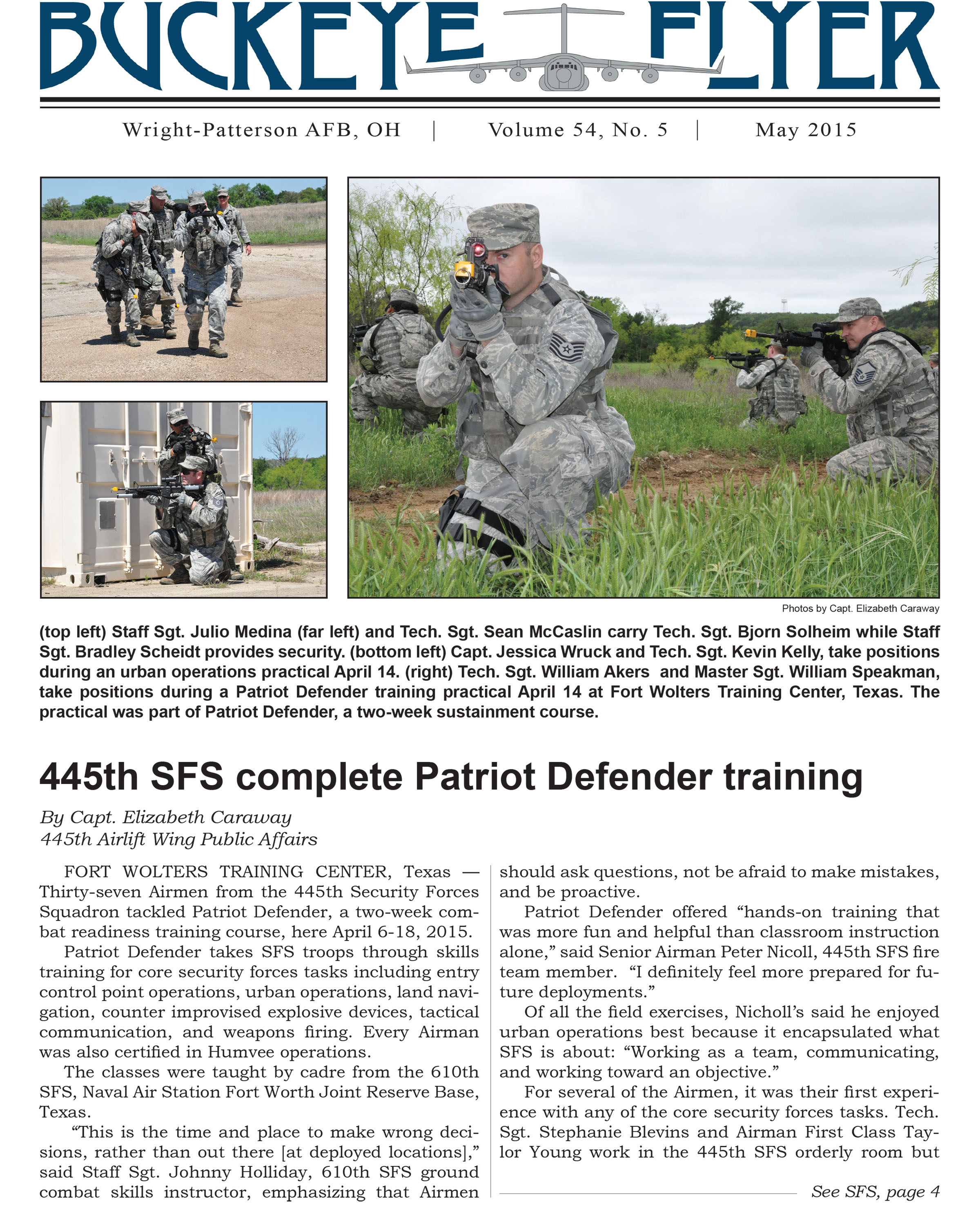

May issue of the Buckeye Flyer now available > 445th Airlift Wing22 setembro 2024

-

TAY CONTI, ANNA JAY AND THUNDER ROSA AEW WRESTLING Unsigned 8x1022 setembro 2024

TAY CONTI, ANNA JAY AND THUNDER ROSA AEW WRESTLING Unsigned 8x1022 setembro 2024

você pode gostar

-

Already preparing for Halloween…22 setembro 2024

-

Brasil perde para a Bélgica e está fora da Copa do Mundo22 setembro 2024

Brasil perde para a Bélgica e está fora da Copa do Mundo22 setembro 2024 -

Club Cerro Porteño – Match Report vs. Club Nacional Asunción 06/0322 setembro 2024

Club Cerro Porteño – Match Report vs. Club Nacional Asunción 06/0322 setembro 2024 -

The Eminence in Shadow Season 2 Episode 9 Trailer22 setembro 2024

-

Shrek E Burro X Monsters Inc. Fronha 20x30 50*75 Sofá Quarto Medo Shitpost Meme Deus Shrek E Burro Shronkey Shrek - Fronhas - AliExpress22 setembro 2024

Shrek E Burro X Monsters Inc. Fronha 20x30 50*75 Sofá Quarto Medo Shitpost Meme Deus Shrek E Burro Shronkey Shrek - Fronhas - AliExpress22 setembro 2024 -

Sonic Frontiers teria reaproveitado level design de jogo antigo22 setembro 2024

Sonic Frontiers teria reaproveitado level design de jogo antigo22 setembro 2024 -

Nanticoke, Lenape tribal status recognized in First State, struggle continues in New Jersey22 setembro 2024

Nanticoke, Lenape tribal status recognized in First State, struggle continues in New Jersey22 setembro 2024 -

Palkia Forma Origem V-ASTRO, Pokémon22 setembro 2024

Palkia Forma Origem V-ASTRO, Pokémon22 setembro 2024 -

Gear Review by Kelly: Arc'teryx Alpha SL Hybrid Jacket in Long22 setembro 2024

Gear Review by Kelly: Arc'teryx Alpha SL Hybrid Jacket in Long22 setembro 2024 -

Game Dimensions, Tekken 8, Kazuya Mishima Collectible Action Figure22 setembro 2024

Game Dimensions, Tekken 8, Kazuya Mishima Collectible Action Figure22 setembro 2024